CockroachDB on Kubernetes

In this post I'll show how to deploy CockroachDB to your Kubernetes cluster, configure it, and then create an example application to demonstrate how well CockroachDB handles node/pod failures.

First of all, we need to install CockroachDB. The official documentation shows how to install it using Helm, but hey, we need to see those YAMLs, right? To do that, we will use the StatefulSet YAML that CockroachDB provides in its own repo. You can configure your database as secure or insecure. Secure mode creates certificates and uses TLS for connections between nodes. In this post we will follow secure mode (because TLS, that's why).

$ kubectl apply -f https://raw.githubusercontent.com/cockroachdb/cockroach/master/cloud/kubernetes/cockroachdb-statefulset-secure.yaml

This will create 3 replicas of CockroachDB. But you will see that the pods are stuck in the Init status. That's because each pod is waiting for its certificate to be issued.

$ kubectl get po

NAME READY STATUS RESTARTS AGE

cockroachdb-0 0/1 Init:0/1 0 4m3s

cockroachdb-1 0/1 Init:0/1 0 4m2s

cockroachdb-2 0/1 Init:0/1 0 4m2s

Let's look at the logs of one of them. We only look at the init-certs container's logs, because that is the container trying to create the certificate.

$ kubectl logs cockroachdb-0 -c init-certs

Request sent, waiting for approval. To approve, run ‘kubectl certificate approve default.node.cockroachdb-0’

2019-05-29 09:06:55.291231733 +0000 UTC m=+30.302293510: waiting for ‘kubectl certificate approve default.node.cockroachdb-0’

Let's approve this certificate, along with the ones for the other pods. We can automate this, of course.

$ kubectl certificate approve default.node.cockroachdb-0

$ kubectl certificate approve default.node.cockroachdb-1

$ kubectl certificate approve default.node.cockroachdb-2
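The three approvals above can be scripted. Here is a minimal sketch, assuming the CSR names follow the `default.node.cockroachdb-<ordinal>` pattern seen in the init-certs logs. The function names and the `KUBECTL` override variable are hypothetical helpers made up for this post, not part of any CockroachDB tooling.

```shell
# Hypothetical helpers; the names are illustrative only.
# KUBECTL can be overridden (for example KUBECTL=echo for a dry run).
KUBECTL="${KUBECTL:-kubectl}"

# Print the node CSR names for a StatefulSet with the given replica count.
# Assumes the default.node.cockroachdb-<ordinal> naming shown in the logs.
node_csr_names() {
  replicas="$1"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    echo "default.node.cockroachdb-$i"
    i=$((i + 1))
  done
}

# Approve every node CSR, one kubectl call per name; stop on the first failure.
approve_node_csrs() {
  for name in $(node_csr_names "$1"); do
    "$KUBECTL" certificate approve "$name" || return 1
  done
}
```

Calling `approve_node_csrs 3` would then approve the requests for all three pods. Keep in mind that approving requests blindly skips the manual review step, so this is probably only a good idea on a test cluster.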

After this step we can see that our pods are running, but the readiness probe is failing with 'HTTP 503'.

No worries because our installation is not finished yet.

We need to create a job to initialize the CockroachDB cluster, and that creates another certificate request, this time for default.client.root.

$ kubectl create -f cluster-init-secure.yaml

job.batch/cluster-init-secure created

$ kubectl certificate approve default.client.root

certificatesigningrequest.certificates.k8s.io/default.client.root approved

After this step you should see that the pods are ready and CockroachDB is running without problems.

As the documentation says, we need to create a client pod to access the database. Basically, we just need to run ./cockroach sql --certs-dir=.. etc.

A user is required for accessing the UI. Let's create one then.

$ kubectl exec -it cockroachdb-client -- ./cockroach sql --certs-dir=/cockroach-certs --host=cockroachdb-public

Welcome to the cockroach SQL interface.

All statements must be terminated by a semicolon.

To exit: CTRL + D.

#

Server version: CockroachDB CCL v19.1.0 (x86_64-unknown-linux-gnu, built 2019/04/29 18:36:40, go1.11.6) (same version as client)

Cluster ID: c50f8709-00cb-4f80-a804-4961c36b97cb

#

Enter \? for a brief introduction.

root@cockroachdb-public:26257/defaultdb> CREATE USER fahri WITH PASSWORD 'blog';

CREATE USER 1

Time: 238.920551ms

As you can see, we created our first user on CockroachDB. Let's look at the web UI with this user.
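If you would rather not open an interactive SQL shell, the same statement can be sent in one shot. This is a rough sketch, assuming the `cockroachdb-client` pod and the `/cockroach-certs` directory used above. The `crdb_exec_sql` function and the `KUBECTL` override variable are hypothetical names of my own.

```shell
# Hypothetical helper; KUBECTL can be overridden (KUBECTL=echo for a dry run).
KUBECTL="${KUBECTL:-kubectl}"

# Run a single SQL statement through the client pod and print the result.
# Assumes the cockroachdb-client pod and cert directory from the steps above.
crdb_exec_sql() {
  "$KUBECTL" exec cockroachdb-client -- ./cockroach sql \
    --certs-dir=/cockroach-certs \
    --host=cockroachdb-public \
    --execute="$1"
}
```

For example, `crdb_exec_sql "CREATE USER fahri WITH PASSWORD 'blog';"` should have the same effect as typing the statement at the prompt, and `crdb_exec_sql "SHOW USERS;"` lists the users afterwards.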

We could also create a service, ingress, etc., but let's just pick one pod and port-forward to it:

$ kubectl port-forward cockroachdb-1 8080

and log in at https://localhost:8080/

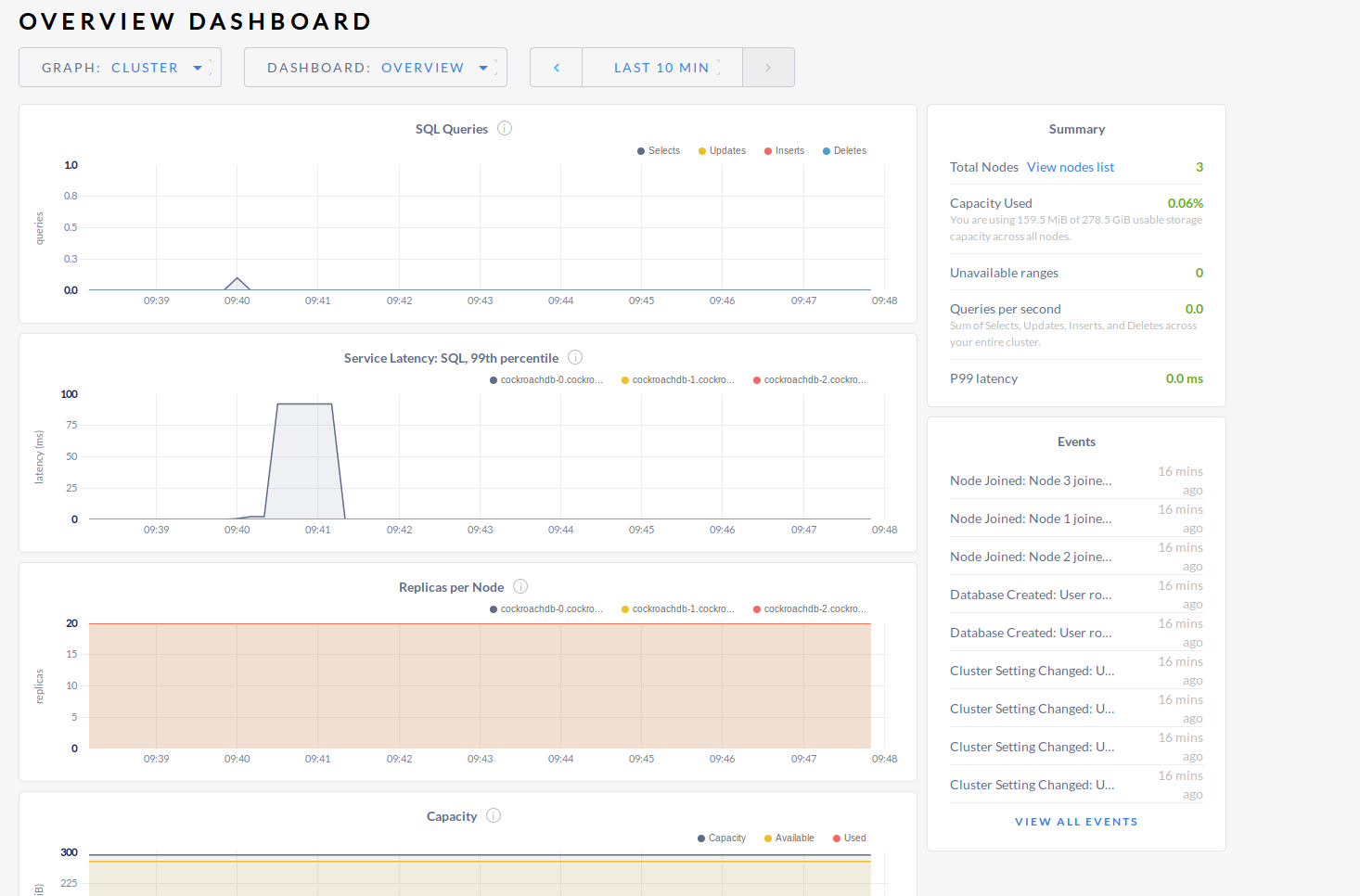

But most importantly, we can see our cluster metrics nicely, along with the latest events.

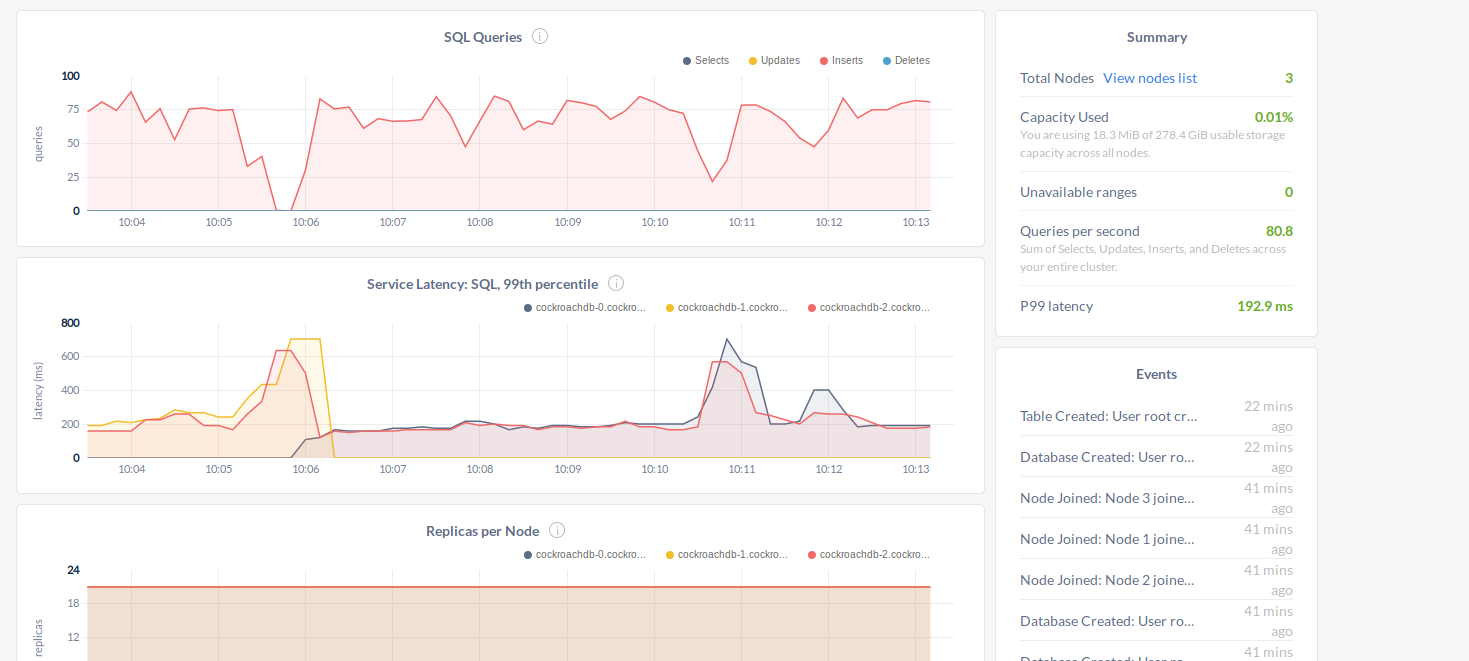

Let's create some load. You can access CockroachDB using a PostgreSQL driver, which is awesome for developing applications. For this purpose let's use loadgen-kv, as the cockroach repo shows.

As you can see, we have created a simple load generator.
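Since CockroachDB speaks the PostgreSQL wire protocol, a client mostly needs a connection URL. Below is a small sketch of how such a URL could be put together for a secure cluster. It assumes port 26257 and the `defaultdb` database seen in the SQL prompt earlier, and it assumes the cert directory contains `ca.crt`, `client.<user>.crt` and `client.<user>.key`. The `crdb_url` function itself is a hypothetical helper.

```shell
# Hypothetical helper: print a PostgreSQL-style connection URL that uses
# client certificates. The file names under the cert directory are assumed
# to be ca.crt, client.<user>.crt and client.<user>.key.
crdb_url() {
  user="$1"
  host="${2:-cockroachdb-public}"
  certs="${3:-/cockroach-certs}"
  echo "postgresql://${user}@${host}:26257/defaultdb?sslmode=verify-full&sslrootcert=${certs}/ca.crt&sslcert=${certs}/client.${user}.crt&sslkey=${certs}/client.${user}.key"
}
```

Inside the client pod you could try it with `./cockroach sql --url "$(crdb_url root)"`. A PostgreSQL driver in your application should accept a URL of the same shape, although the exact TLS parameter names can differ between drivers.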

Now let's delete one pod and see how the cluster behaves. Since these pods use persistent volume claims, they can recover easily: after the init, they only need to catch up on the new data.

$ kubectl delete pod cockroachdb-0

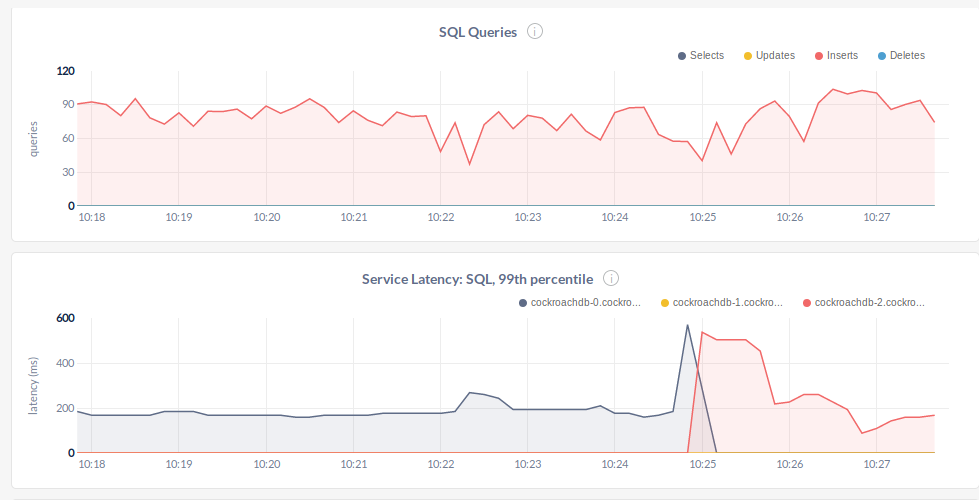
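To follow the recovery from the terminal as well, the delete can be combined with a watch on the pods. This is a sketch only: it assumes the pods carry the label `app=cockroachdb` (check your manifest), and `delete_and_watch` and the `KUBECTL` override variable are hypothetical names.

```shell
# Hypothetical helper; KUBECTL can be overridden (KUBECTL=echo for a dry run).
KUBECTL="${KUBECTL:-kubectl}"

# Delete one pod, then stream pod status changes until interrupted (Ctrl+C).
# The app=cockroachdb label selector is an assumption about the manifest.
delete_and_watch() {
  "$KUBECTL" delete pod "$1" || return 1
  "$KUBECTL" get pods -l app=cockroachdb --watch
}
```

Running `delete_and_watch cockroachdb-0` should show the pod being recreated by the StatefulSet and going back to ready.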

And observe how the requests are handled.

As you can see, our load generator sends requests to our service. But once it connects to a pod, it always uses the same database node. That's the same as with a PostgreSQL driver, where you can only select one instance (yes, you can load balance them). As we deleted node-0, it starts to send requests to node-2. And for a limited time, latency is 3x higher than normal.

There is also a good statistics page per SQL query.

This page lets us see whether a query is distributed, whether it failed, and what its latency is.

Future Work:

- Make a distributed query/load generator and do this example again?

- How will the cluster handle a bigger query if one node is down? Does this affect our nodes' initialization time, and if so, by how long?